Occlusion Aware Vehicle Detection

NN, YOLO, Localization.

Background: I did this project for the COMPSCI 682: Neural Networks: A Modern Introduction course (Fall ’24) at UMass, together with two other students. My contribution was developing a two-stage detection pipeline that integrates a ResNet-18 secondary classifier into YOLOv5m, refining predictions and reducing false positives to improve detection accuracy. I also created a custom dataset for training the secondary classifier to distinguish true positives from false positives, and demonstrated that our approach improves detection accuracy while maintaining computational efficiency across the YOLOv5 family.

Project Title: Occlusion-aware Module for 2D Object Detection for Autonomous Driving.

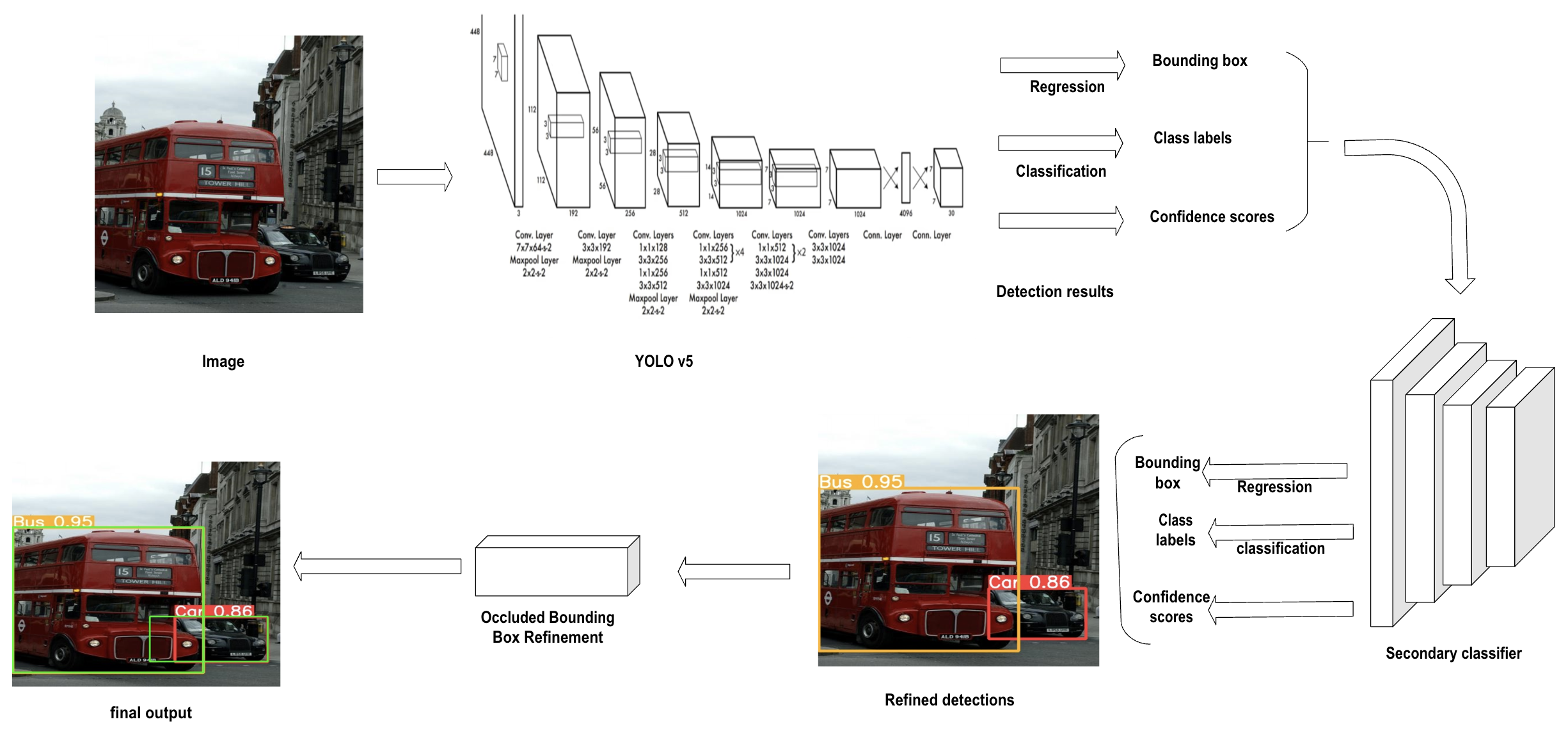

Project Overview: This project presents a two-stage detection pipeline that enhances YOLOv5m by integrating a ResNet-18 secondary classifier. Our approach refines the detector's predictions, reducing false detections while improving localization accuracy for occluded objects. Specifically:

- We designed a two-stage object detection system integrating ResNet-18 with YOLOv5m, improving localization accuracy and reducing false positives in occluded scenarios (AP@IoU=0.5 improved by 24.7%, from 0.511 to 0.637).

- We fine-tuned YOLOv5m with occlusion-aware modules, achieving a 44.5% improvement in AP for small vehicles (from 0.236 to 0.341) on the COCO vehicle dataset.
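The two-stage idea described above can be sketched in a few lines. This is a minimal illustration, not the project's actual code: `Detection`, `refine_detections`, the `verifier` callable, and the `keep_threshold` value are all hypothetical names chosen for this example. The `verifier` stands in for the ResNet-18 secondary classifier, which in practice would score the image crop under each box rather than the box coordinates.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# (x1, y1, x2, y2) corner coordinates in pixels
Box = Tuple[float, float, float, float]


@dataclass
class Detection:
    box: Box
    label: str
    score: float  # confidence from the first-stage detector


def refine_detections(
    detections: List[Detection],
    verifier: Callable[[Box], float],
    keep_threshold: float = 0.5,  # assumed value, not taken from the project
) -> List[Detection]:
    """Keep only the detections that the second-stage verifier accepts.

    `verifier` is a placeholder for the secondary classifier: it returns
    an estimated probability that a first-stage detection is a true positive.
    """
    kept = []
    for det in detections:
        if verifier(det.box) >= keep_threshold:
            kept.append(det)
    return kept


if __name__ == "__main__":
    # Toy verifier: treats very small boxes as likely false positives.
    def toy_verifier(box: Box) -> float:
        area = (box[2] - box[0]) * (box[3] - box[1])
        return 0.9 if area >= 50 else 0.1

    first_stage = [
        Detection((0, 0, 10, 10), "car", 0.90),
        Detection((5, 5, 6, 6), "car", 0.40),
    ]
    for det in refine_detections(first_stage, toy_verifier):
        print(det)
```

Keeping the second stage as a plain callable means the detector and the classifier stay decoupled, so the same filtering step could in principle sit behind any model in the YOLOv5 family.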

Findings: Here are the major findings of this project:

- The integration of a ResNet-18 secondary classifier into YOLOv5m reduced false positives and misclassifications, leading to a 24.7% increase in AP@IoU=0.5 (from 0.511 to 0.637).

- The occlusion-aware bounding box enhancement method significantly improved localization accuracy for occluded objects without requiring model retraining.

- The model achieved a 44.5% improvement in AP for small vehicles (from 0.236 to 0.341) and a 28.1% increase for medium vehicles (from 0.477 to 0.611).

- Despite performance improvements, the model retained the computational efficiency of YOLOv5m, making it suitable for real-time applications.

- The proposed model improved AR@[0.5:0.95] by 22.3% (from 0.557 to 0.681) and AR@IoU=0.75 by 23.3% (from 0.548 to 0.676), ensuring higher detection consistency across different vehicle categories.

- While YOLOv5x achieved a slightly higher AP@IoU=0.5 (0.672), our model offers a competitive alternative with significantly lower computational costs, making it a better choice for real-time autonomous driving applications.
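The percentages in the findings are relative gains over the baseline, not absolute differences in AP or AR. The short sketch below recomputes them from the before/after values quoted above; `relative_gain` is a helper name introduced here for illustration. Because the inputs are the rounded three-decimal figures from this page, the last digit can differ slightly from the reported percentages.

```python
def relative_gain(baseline: float, improved: float) -> float:
    """Return the relative change from `baseline` to `improved`, in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (improved - baseline) / baseline * 100.0


# (before, after) pairs as quoted in the findings
reported = {
    "AP@IoU=0.5": (0.511, 0.637),
    "AP, small vehicles": (0.236, 0.341),
    "AP, medium vehicles": (0.477, 0.611),
    "AR@[0.5:0.95]": (0.557, 0.681),
    "AR@IoU=0.75": (0.548, 0.676),
}

for name, (before, after) in reported.items():
    gain = relative_gain(before, after)
    print(f"{name}: {before:.3f} -> {after:.3f} ({gain:+.1f}%)")
```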