ICL Capabilities of LLMs

In-Context Learning, LLM, Hugging Face, Chain-of-Thought (CoT).

Background: I did this project with four other students for the COMPSCI 685: Advanced Natural Language Processing course (Spring ’24) at UMass. My contribution was exploring the in-context learning capabilities of different LLMs, which is described in Section 7 of the report.

Project Title: Exploring the New Horizon of Sequence Modeling: Unveiling the Potentials and Challenges of Mamba.

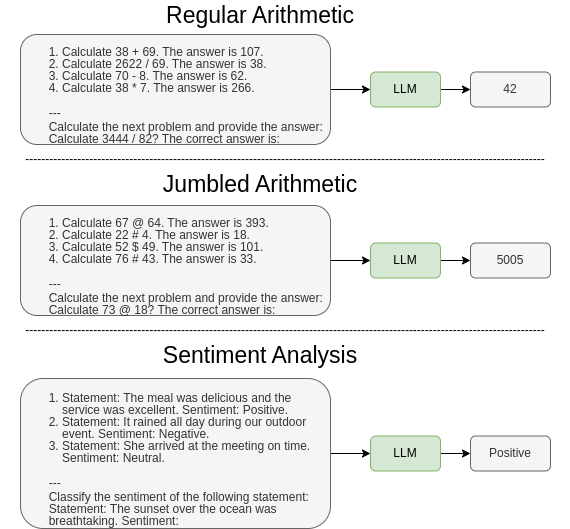

Project Overview: This evaluation explores the in-context learning (ICL) capabilities of pre-trained language models on arithmetic and sentiment analysis tasks using synthetic datasets. The goal is to assess how well these models perform on each task under different prompting strategies: zero-shot, few-shot, and chain-of-thought (CoT). We conducted two types of arithmetic tasks:

- Regular Arithmetic: Involves the basic operations of addition, subtraction, multiplication, and integer division.

- Jumbled Arithmetic: Involves arithmetic operations written with new symbols, one per operation:

  - '$' represents addition, so a $ b equals a + b.

  - '#' represents subtraction, so a # b equals a - b.

  - '@' represents the operation (a + b) * (a - b), so a @ b equals a² - b².
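The two task definitions above can be made concrete with a small generator. This is a minimal Python sketch, not the project's actual data pipeline: the operand range (0 to 99), the `a op b =` question format, and the helper names `make_dataset` and `jumbled_rationale` are assumptions for illustration. The rationale helper shows one hypothetical way a step-by-step demonstration for a CoT prompt could be written.

```python
import operator
import random

# Regular arithmetic: standard symbols. "/" is integer (floor) division;
# with the default non-negative operand range, floor division and
# truncating division give the same result.
REGULAR_OPS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": operator.floordiv,
}

# Jumbled arithmetic: each new symbol maps to the operation defined above.
JUMBLED_OPS = {
    "$": lambda a, b: a + b,              # a $ b = a + b
    "#": lambda a, b: a - b,              # a # b = a - b
    "@": lambda a, b: (a + b) * (a - b),  # a @ b = (a + b) * (a - b)
}


def make_example(ops, rng, low=0, high=99):
    """Draw one binary expression and return (question, gold_answer)."""
    symbol = rng.choice(sorted(ops))
    a = rng.randint(low, high)
    b = rng.randint(low, high)
    if symbol == "/" and b == 0:
        b = 1  # avoid division by zero
    return f"{a} {symbol} {b} =", ops[symbol](a, b)


def make_dataset(ops, n, seed=0):
    """Generate n reproducible (question, answer) pairs for an operator table."""
    rng = random.Random(seed)
    return [make_example(ops, rng) for _ in range(n)]


def jumbled_rationale(a, symbol, b):
    """Spell out a jumbled expression step by step (hypothetical CoT format)."""
    if symbol == "$":
        return f"{a} $ {b} means {a} + {b} = {a + b}."
    if symbol == "#":
        return f"{a} # {b} means {a} - {b} = {a - b}."
    if symbol == "@":
        s, d = a + b, a - b
        return f"{a} @ {b} means ({a} + {b}) * ({a} - {b}) = {s} * {d} = {s * d}."
    raise ValueError(f"unknown jumbled symbol: {symbol!r}")
```

For example, `jumbled_rationale(5, "@", 3)` returns `"5 @ 3 means (5 + 3) * (5 - 3) = 8 * 2 = 16."`. Seeding a private `random.Random` instance keeps each generated dataset reproducible, so every model can be evaluated on identical questions.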

Findings: The major findings of this ICL analysis are:

- Mistral-7b consistently outperformed the other models across all tasks, performing robustly regardless of the demonstration type.

- Cerebras-btlm-3b showed limited improvement as the number of demonstrations increased, suggesting it may be less able to make effective use of contextual information.

- In regular arithmetic, models generally improved with more demonstrations, with Mamba-7b and Mamba-2.8b benefiting particularly from demonstrations with true labels.

- Jumbled arithmetic revealed a stark contrast in model performance under CoT prompting: Mistral-7b excelled, indicating a strong ability to leverage the additional contextual cues.

- In sentiment analysis, all models benefited from demonstrations, especially those with true labels. CoT prompting notably improved performance, with Cerebras-btlm-3b and Llama2-7b showing considerable gains.

- Demonstrations with random labels generally improved model performance, but to a lesser extent than demonstrations with true labels.
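The demonstration settings compared in these findings (no demonstrations, demonstrations with true labels, and demonstrations with random labels) can be sketched as a small prompt builder. This is a minimal Python sketch under stated assumptions, not the project's actual code: the `Input:`/`Label:` template, the helper names `build_prompt` and `accuracy`, and drawing random labels uniformly from the label space are hypothetical choices for illustration. Model inference is left out.

```python
import random


def format_demo(text, label):
    """Render one demonstration as an input/label pair."""
    return f"Input: {text}\nLabel: {label}\n"


def build_prompt(query, demos=(), label_space=None, random_labels=False, seed=0):
    """Build an ICL prompt (hypothetical template).

    demos empty        -> zero-shot prompt
    demos given        -> few-shot prompt with the true (gold) labels
    random_labels=True -> each demo label is replaced by one drawn uniformly
                          at random from label_space
    """
    rng = random.Random(seed)
    parts = []
    for text, label in demos:
        if random_labels:
            if not label_space:
                raise ValueError("random_labels=True requires a label_space")
            label = rng.choice(list(label_space))
        parts.append(format_demo(text, label))
    # The query uses the same template, with the label left for the model.
    parts.append(f"Input: {query}\nLabel:")
    return "\n".join(parts)


def accuracy(predictions, golds):
    """Exact-match accuracy after stripping surrounding whitespace."""
    if len(predictions) != len(golds):
        raise ValueError("predictions and golds differ in length")
    if not golds:
        return 0.0
    hits = sum(p.strip() == g.strip() for p, g in zip(predictions, golds))
    return hits / len(golds)
```

For example, `build_prompt("A delightful surprise.")` returns the zero-shot prompt `"Input: A delightful surprise.\nLabel:"`. Fixing the seed means the random-label prompts are identical across models, so differences in accuracy reflect the models rather than the sampled labels.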